Docker Containers: A Modern Approach to Application Delivery

In software development, agility and speed are top priorities. Docker containers have been a real game-changer because they provide a standardized way to package and deploy applications. The technology eliminates inconsistencies between environments, shortens release cycles, and simplifies application management.

Let's start with the basics: what Docker containers are, their features and benefits, and how they streamline software engineering operations. With an understanding of containerization, developers and IT professionals can gain new levels of flexibility and control over how they build, ship, and run modern applications.

What is Docker?

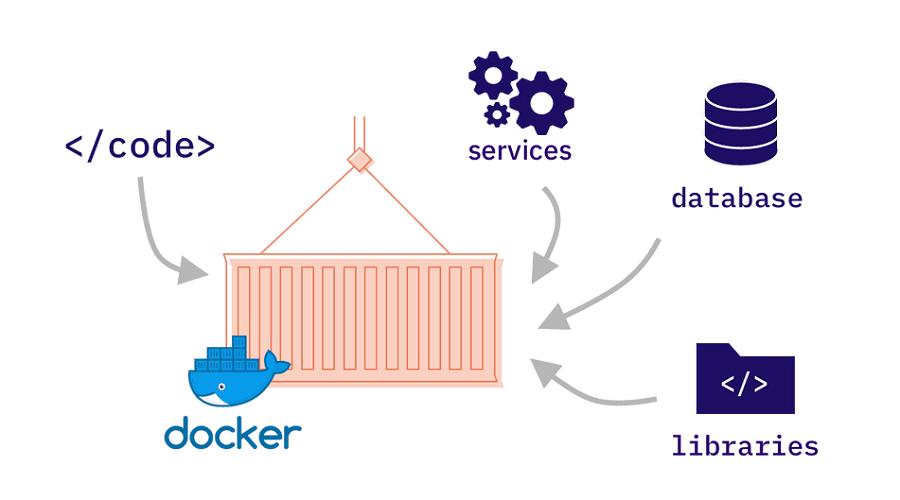

Docker is an open-source platform that lets developers package applications into standardized units called containers. A container bundles the application code together with the libraries, system utilities, settings, and supporting programs it needs into a single, self-sufficient package. Because of this, a container runs the same way on any machine that has Docker installed, regardless of how that machine is set up. (Linux containers still need a Linux kernel; on Windows and macOS, Docker Desktop provides one through a lightweight virtual machine.)

Here's a breakdown of the key aspects of Docker:

- Containerization: Docker is built on containerization, a lightweight form of virtualization that isolates applications from each other and from the host system. Unlike traditional virtual machines (VMs), which each need a full guest operating system, containers share the host kernel, which makes them more efficient and portable.

- Standardization: Docker packages every application in the same consistent, standardized way, so the environment an application runs in no longer varies from machine to machine. This removes the problems caused by environment-specific configuration and helps applications behave the same way across development, testing, and production.

- Portability: Docker containers are highly portable. Developers can build a container on one system and run it on any other system with Docker installed, without worrying about compatibility issues. This simplifies collaboration and deployment across diverse environments.

Ready to use Docker for your projects?

Partner with VPSServer! We offer a wealth of resources and support to help you navigate the world of containerization. Explore our comprehensive Docker tutorials, guides, and documentation to gain the expertise you need.

In addition to our learning resources, VPSServer provides the perfect platform to put your Docker skills into action. Our high-performance VPS plans are optimized for Docker deployments, ensuring smooth operation and efficient resource utilization.

Take the next step towards a more agile and efficient development process. Contact VPSServer today to learn more about our Docker-friendly hosting solutions!

How do containers work, and why are they so popular?

The magic behind Docker containers lies in their efficient, self-contained design. Let's explore the key factors behind their rapid rise in popularity over the last few years:

Lighter weight

Containers let you run several isolated application instances on top of the host operating system's kernel. Unlike traditional virtual machines, which each boot their own guest operating system, containers share that kernel, so they need fewer resources and can be started and stopped quickly. This speeds up deployments and improves scalability for cloud users.

Improved developer productivity

Throughout the software development lifecycle, containers offer developers a standardized environment. By packaging their dependencies and apps into containers, developers can make sure that their work on a local PC or server will function the same way in a production setting. This consistency speeds up time-to-market and increases productivity by streamlining the development process and lowering the possibility of "it works on my machine" problems.

Greater resource efficiency

Containers make the most of available resources by running many isolated instances on a single host machine. This efficient sharing of resources enables higher-density deployments, so more applications can run on the same hardware without sacrificing performance. Because each application no longer needs its own operating system, containers reduce overhead and increase resource efficiency, which makes them an affordable option for delivering and scaling applications.

Why use Docker?

The advantages of Docker extend far beyond its core functionality. Here's a closer look at why developers and IT professionals increasingly turn to Docker to develop and deploy applications, including cloud services:

Improved and seamless container portability

Docker containers are inherently portable. They can be built on one system and run on any other system with Docker installed, which eliminates most compatibility issues and simplifies deployments across diverse environments. Developers can build an application with Docker on their local machine and be confident that it will behave the same way in production. The main caveats are that Linux containers need a Linux kernel (which Docker Desktop supplies on Windows and macOS) and that an image must match the host's CPU architecture, such as x86-64 or ARM64, unless it has been built for multiple platforms.

Even lighter weight and more granular updates

Compared to traditional virtual machines, Docker containers have a much smaller footprint. Since they share the host kernel, they only need to include the application code and its dependencies, which means faster startup times and lower resource consumption. Updates can also be more granular, because Docker images are built in layers, one for each build step. When a library or dependency changes, Docker rebuilds only the layer for that step and the layers after it, reusing unchanged layers from its cache, and hosts that already have those layers only download the new ones. This makes deployments more efficient.
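
To make the layer idea concrete, here is a minimal Python sketch of how a build cache can decide what to rebuild. It assumes a simplified model: each build step becomes a layer whose ID is a hash of the step plus its parent layer's ID, so changing one step invalidates that layer and every layer above it. The function names and sample steps are hypothetical. Real Docker builds also checksum the files brought in by COPY and ADD, which the second field of each step stands in for here.

```python
import hashlib

# Hypothetical build steps: (instruction, stand-in for the content of any copied files).
BUILD_V1 = [
    ("FROM python:3.12-slim", ""),
    ("RUN pip install flask==3.0.0", ""),
    ("COPY app.py /app/", "app.py, revision 1"),
]
BUILD_V2 = BUILD_V1[:2] + [("COPY app.py /app/", "app.py, revision 2")]    # only the app code changed
BUILD_V3 = [BUILD_V1[0], ("RUN pip install flask==3.0.3", ""), BUILD_V1[2]]  # a dependency was bumped


def layer_ids(steps):
    """Chain each step's hash to its parent's, so one change ripples to every later layer."""
    ids, parent = [], ""
    for instruction, content in steps:
        digest = hashlib.sha256(f"{parent}|{instruction}|{content}".encode()).hexdigest()
        ids.append(digest[:12])
        parent = digest
    return ids


def steps_to_rebuild(previous, current):
    """Indexes of steps whose layer cannot be reused from the previous build's cache."""
    old, new = layer_ids(previous), layer_ids(current)
    return [i for i, layer in enumerate(new) if i >= len(old) or layer != old[i]]


print(steps_to_rebuild(BUILD_V1, BUILD_V2))  # [2]     base image and dependency layers reused
print(steps_to_rebuild(BUILD_V1, BUILD_V3))  # [1, 2]  every step after the change is rebuilt
```

This is why Dockerfiles commonly install dependencies before copying the rest of the source code: everyday code edits then leave the slower dependency layer cached.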

Automated container creation

Docker automates the creation of container images, which removes manual configuration and streamlines the development process. Developers define the application's dependencies and configuration in a Dockerfile, and Docker's build command (docker build) turns that file into an image automatically.

Container versioning

Docker supports versioning through image tags (for example, myapp:1.0 and myapp:1.1) and content digests that always identify exactly the same image. This lets developers track changes and easily roll back to a previous version if issues arise. Versioning also makes collaboration and deployment easier across teams, because everyone can run exactly the same image version.
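
In a registry, each image is identified by a digest (a SHA-256 hash of the image's manifest), while tags are human-readable names that point at a digest and can be moved. The toy Python class below models only that relationship; it is hypothetical and is not the Docker Registry API, and here the "digest" is simply the SHA-256 of a byte string standing in for an image. It shows why redeploying an older tag, or pinning a digest, gives a dependable rollback target even after latest has moved on.

```python
import hashlib


class TinyRegistry:
    """Hypothetical in-memory registry: content is stored by digest, tags point at digests."""

    def __init__(self):
        self.blobs = {}  # digest -> image content (never changes once stored)
        self.tags = {}   # tag -> digest (can be re-pointed to a newer digest)

    def push(self, tag: str, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        self.blobs[digest] = content
        self.tags[tag] = digest
        return digest

    def resolve(self, ref: str) -> str:
        """Accept either a tag or a digest and return the digest it refers to."""
        return ref if ref.startswith("sha256:") else self.tags[ref]

    def pull(self, ref: str) -> bytes:
        return self.blobs[self.resolve(ref)]


registry = TinyRegistry()
v1 = registry.push("app:1.0", b"image contents, release 1.0")
registry.push("app:latest", b"image contents, release 1.0")
v2 = registry.push("app:1.1", b"image contents, release 1.1")
registry.push("app:latest", b"image contents, release 1.1")  # "latest" now points at 1.1

# Rolling back means deploying the previous tag, or pinning the exact digest.
print(registry.pull("app:1.0") == registry.pull(v1))  # True
print(registry.resolve("app:latest") == v2)           # True
```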

Container reuse

Reuse is a cornerstone of efficient development. Instead of building everything from scratch, developers can start from pre-built, tested images that already contain common dependencies and components, such as a language runtime or a web server, and add their own code on top. This saves time and effort and accelerates development cycles.

Shared container libraries

Docker Hub, a public registry of container images, hosts a vast library of pre-built and tested images. Developers can find and use images built by the community for purposes such as databases, web servers, and development tools. This eliminates the need to build common components from scratch and promotes collaboration within the developer community.

Docker tools and terms

Docker comes with a rich ecosystem of tools and concepts that help developers and IT professionals manage the container lifecycle effectively. Understanding these core elements is key to getting the most out of Docker:

Dockerfile

A Dockerfile is a plain text file that serves as both the blueprint and the documentation for building a Docker image. It contains a series of instructions that specify the base image, the files to copy in, the software to install, environment variables, and any other setup the application needs to run. Docker follows these instructions to produce the image automatically, so every build is repeatable and consistent.
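
A Dockerfile is a list of instructions, one per line, where a trailing backslash continues an instruction onto the next line. The Python sketch below holds a hypothetical Dockerfile for a small web app in a string and splits it into instruction and argument pairs, which shows the structure Docker reads when it builds an image. The file contents (the python:3.12-slim base image, requirements.txt, app.py, port 8000) are illustrative assumptions, and the parser is a simplification rather than Docker's own: it ignores parser directives, heredocs, and custom escape characters.

```python
# A hypothetical Dockerfile for a small Python web app, held in a string for the demo.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
# Install dependencies first so this layer stays cached when only the code changes
COPY requirements.txt .
RUN pip install --no-cache-dir \\
    -r requirements.txt
COPY . .
ENV PORT=8000
EXPOSE 8000
CMD ["python", "app.py"]
"""


def parse_dockerfile(text):
    """Return (INSTRUCTION, arguments) pairs, skipping comments and joining continued lines."""
    steps, pending = [], ""
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue                             # blank lines and comments are not instructions
        if line.endswith("\\"):
            pending += line[:-1].rstrip() + " "  # a trailing backslash continues the instruction
            continue
        instruction, _, args = (pending + line).partition(" ")
        steps.append((instruction.upper(), args.strip()))
        pending = ""
    return steps


for instruction, args in parse_dockerfile(DOCKERFILE):
    print(f"{instruction:<8} {args}")
```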

Docker images

A Docker image is a read-only template that contains everything an application needs to run: the application code, libraries, system tools, settings, and other dependencies. Images are built from stacked layers, are lightweight and portable, and serve as the foundation for creating Docker containers.

Docker containers

A Docker container is a running instance of a Docker image. It holds the application and all of its dependencies in a small, isolated environment, plus a thin writable layer for any changes made while it runs. A single image can be used to start many containers, which makes resource management more efficient. Compared with virtual machines, containers are more efficient because they share the host kernel.
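
The relationship between an image and its containers can be modeled with Python's collections.ChainMap. In this hypothetical sketch, an image is a stack of read-only layers (file path to contents), and each container adds its own empty writable layer on top. Reads fall through to the image layers, while writes land only in the container's own layer, which loosely mirrors the copy-on-write storage Docker uses. The file names and contents are made up for illustration.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical image layers (file path -> contents), wrapped as read-only mappings.
base_layer = MappingProxyType({"/etc/os-release": "Debian", "/usr/bin/python3": "<binary>"})
app_layer = MappingProxyType({"/app/app.py": "print('hello')", "/app/config.ini": "debug=false"})
IMAGE = (app_layer, base_layer)  # top layer first, because ChainMap searches left to right


def start_container(image_layers):
    """A container view: a new, empty writable layer stacked on the shared image layers."""
    return ChainMap({}, *image_layers)


web1 = start_container(IMAGE)
web2 = start_container(IMAGE)

web1["/app/config.ini"] = "debug=true"  # the write lands only in web1's writable layer

print(web1["/app/config.ini"])       # debug=true
print(web2["/app/config.ini"])       # debug=false
print(app_layer["/app/config.ini"])  # debug=false (the image itself is unchanged)
```

Removing a container simply discards its writable layer; the image layers stay untouched and can be shared by any number of containers.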

Docker Hub

Docker Hub is a public registry service for storing and distributing Docker images. It works as a large library where developers can find, download, and publish container images. Docker Hub offers numerous public repositories of pre-built and tested images for different operating systems, databases, applications, and development tools. As a result, there's no need to start from scratch, and engagement within the developer community is encouraged.

Docker Desktop

Docker Desktop is an application that lets users build, run, and share Docker containers on their local computer. It provides a graphical user interface (GUI) alongside the Docker command line, which makes containerization more approachable for beginners. Docker Desktop is available for Windows, macOS, and Linux.

Docker daemon

The Docker daemon (dockerd) is the background service that manages Docker objects. It listens for requests from the Docker client, either the CLI or any program using the Docker Engine API, and acts on them: it builds images and creates, starts, stops, and removes containers. The daemon is responsible for Docker's core functionality, including container creation, management, and networking.
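
The Docker CLI is itself a client of the daemon's REST API (the Docker Engine API), which on Linux is served on the Unix socket /var/run/docker.sock by default. The sketch below uses only the Python standard library to send GET requests over that socket; GET /version and GET /containers/json are real Engine API endpoints. It assumes a Linux host where the daemon is running and your user is allowed to access the socket. The class and function names are hypothetical.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a Unix domain socket instead of a TCP host."""

    def __init__(self, socket_path, timeout=5.0):
        super().__init__("localhost", timeout=timeout)  # the host only fills in the Host header
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path, socket_path=DOCKER_SOCKET):
    """Send GET `path` to the Docker daemon and return (HTTP status, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()
```

For example, engine_get("/version") returns the daemon's version details (the "Version" field holds the engine version), and engine_get("/containers/json") returns the list of running containers that docker ps displays. If the daemon is not running, the call raises an OSError such as FileNotFoundError.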

Docker registry

A Docker registry is a service for storing and distributing Docker images. Docker Hub is the best-known public registry, but businesses can also run private registries to store their own container images and restrict who can access them. Private registries give an organization more security and control over the images it uses.
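
Which registry an image comes from is encoded in its name. The following sketch splits an image reference into registry, repository, and tag or digest, applying the defaults Docker uses: a name without a registry host refers to Docker Hub (docker.io), single-name official images live under library/, and a missing tag means latest. A first path component counts as a registry host when it contains a dot or a colon or is localhost. The function is hypothetical and simplifies Docker's full reference grammar (it does not validate characters, for example), and registry.example.com is a placeholder for a private registry.

```python
def parse_image_ref(ref: str) -> tuple[str, str, str]:
    """Split an image reference into (registry, repository, tag or digest)."""
    name, digest = ref, None
    if "@" in ref:                                # e.g. nginx@sha256:...
        name, digest = ref.split("@", 1)
    tag = None
    if name.rfind(":") > name.rfind("/"):         # a colon after the last slash starts the tag
        name, tag = name.rsplit(":", 1)
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest        # explicit registry host, optionally with a port
    else:
        registry, repository = "docker.io", name  # no host given: Docker Hub
        if "/" not in repository:
            repository = "library/" + repository  # official images live under library/
    return registry, repository, digest or tag or "latest"


print(parse_image_ref("nginx"))
print(parse_image_ref("registry.example.com:5000/team/app:2.1.0"))
```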

Docker deployment and orchestration

Docker is excellent for building and running individual containers, but applications made up of many containers usually need orchestration tools to automate deployment, networking, and scaling across them. Below is an overview of some essential tools for deploying and orchestrating Docker containers:

Docker plug-ins

Docker plug-ins extend Docker's capabilities by adding features and functionality. Docker supports several types of plug-ins, including volume plug-ins for storage management, network plug-ins, logging plug-ins, and authorization plug-ins that control access to the daemon for security purposes.

Docker Compose

Docker Compose lets you define a multi-container application and run it with a single command (docker compose up). Developers describe the services (containers) an application needs, and how each one is configured, in a YAML file called docker-compose.yml. Docker Compose then handles creating, starting, and connecting the containers, which makes it easier to deploy applications built from many interdependent services.
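
Docker Compose starts services in dependency order, based on each service's depends_on list. The sketch below mirrors that ordering step for a hypothetical docker-compose.yml, written as the equivalent Python dict so no YAML library is needed; the service names, images, and ports are examples. It handles only the short list form of depends_on (Compose also accepts a longer form with conditions) and uses graphlib.TopologicalSorter from the standard library, which requires Python 3.9 or newer.

```python
from graphlib import TopologicalSorter

# Hypothetical docker-compose.yml, expressed as the equivalent Python data.
COMPOSE = {
    "services": {
        "web": {"image": "nginx:1.25", "ports": ["8080:80"], "depends_on": ["api"]},
        "api": {"build": ".", "depends_on": ["db", "cache"]},
        "db": {"image": "postgres:16"},
        "cache": {"image": "redis:7"},
    }
}


def startup_order(compose):
    """Return service names so that every service comes after the services it depends on."""
    graph = {
        name: set(spec.get("depends_on", []))
        for name, spec in compose["services"].items()
    }
    # static_order() raises graphlib.CycleError if services depend on each other in a loop.
    return list(TopologicalSorter(graph).static_order())


print(startup_order(COMPOSE))  # db and cache first, then api, then web
```

Note that by default depends_on only controls start order; it does not wait for a dependency such as a database to be ready to accept connections.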

Kubernetes

For deploying and orchestrating containerized applications at large scale, Kubernetes is the industry's leading choice. It is a free, open-source system that automates the deployment, scaling, and management of containerized applications. Kubernetes provides a set of features for managing container lifecycles, such as:

Automated software deployments: Kubernetes manages deployments and rollbacks of containerized applications.

Scaling: Kubernetes can automatically scale containerized applications up or down based on resource usage, such as CPU utilization.

Service discovery: Kubernetes provides service discovery, so containers can easily find and communicate with each other.

Load balancing: Kubernetes can distribute traffic across multiple container instances of an application, helping to keep it highly available.

Self-healing: Kubernetes automatically restarts failed containers and replaces unhealthy containers with new ones.
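
Scaling and self-healing both come from Kubernetes control loops, which repeatedly compare the desired state with the actual state and act on the difference. The Python sketch below is a toy model of that idea, not Kubernetes code. desired_replicas applies the formula documented for the Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), but leaves out the autoscaler's tolerance band and min/max limits; reconcile replaces unhealthy replicas and trims extras. The replica records and naming scheme are hypothetical.

```python
import itertools
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """HPA-style rule: scale the replica count by how far the metric is from its target."""
    return math.ceil(current_replicas * (current_metric / target_metric))


def reconcile(desired, replicas, new_name):
    """One pass of a toy control loop: drop failed replicas, then add or remove to match `desired`."""
    healthy = [r for r in replicas if r["healthy"]]  # self-healing: failed replicas are discarded
    while len(healthy) < desired:                    # ...and replaced with fresh ones
        healthy.append({"name": new_name(), "healthy": True})
    return healthy[:desired]                         # scale down by removing surplus replicas


counter = itertools.count(1)


def next_name():
    return f"web-{next(counter)}"


# Average CPU is 90% against a 60% target, so 3 replicas become ceil(3 * 1.5) = 5.
target = desired_replicas(3, current_metric=90, target_metric=60)
running = [{"name": "web-a", "healthy": True}, {"name": "web-b", "healthy": False}]
running = reconcile(target, running, next_name)
print(target, [r["name"] for r in running])  # 5 ['web-a', 'web-1', 'web-2', 'web-3', 'web-4']
```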

While Docker Compose offers a simpler solution for managing a few containers, Kubernetes provides a robust and scalable platform for orchestrating complex cloud deployments with numerous containerized services.

Launch Your Dockerized Applications with Confidence on VPSServer!

Docker containers offer a powerful and efficient way to package and deploy applications. But where will you run them? VPSServer provides the perfect platform to unleash the potential of Docker containers.

Here's why VPSServer is your ideal Docker partner:

Seamless Docker Integration: Enjoy a platform optimized for Docker deployments, allowing you to focus on building great software.

Expert Support: Get assistance from our knowledgeable team whenever you need help with your Docker environment.

Blazing-fast SSD storage: Ensure rapid container startup times and smooth application performance.

Get started with VPSServer today and unlock a world of possibilities for your development projects. Visit our website to explore our Docker-friendly VPS plans!

Frequently Asked Questions

What is Docker CE and how does it differ from paid versions?

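The Docker Community Edition (CE) is the free, open-source version of Docker. It offers all the essential features for building, running, and managing Docker containers. Docker's paid subscriptions build on the same engine and add features aimed at teams and businesses, such as commercial use of Docker Desktop in larger organizations, centralized management, and support.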

What is Docker CLI and how to use it?

The Docker CLI (command-line interface) is the main tool for working with Docker. It lets you run commands to build, run, pause, stop, and manage Docker images and containers, for example docker build, docker run, and docker ps. You can learn the Docker CLI commands from the official documentation or various online tutorials.
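
Docker CLI commands follow the pattern docker COMMAND [OPTIONS] [ARGUMENTS], so scripts can drive Docker simply by calling the CLI. The hypothetical helper below assembles a docker run command line from Python values using real docker run options (-d to run in the background, --name, -p for host:container port mappings, -e for environment variables) and executes it with subprocess only if a docker executable is found on the PATH. The image, container name, and variable in the example are placeholders.

```python
import shutil
import subprocess


def build_run_args(image, name=None, ports=(), env=None, detach=True):
    """Assemble the argument list for `docker run` from plain Python values."""
    args = ["docker", "run"]
    if detach:
        args.append("-d")                                # run the container in the background
    if name:
        args += ["--name", name]                         # give the container a name
    for host_port, container_port in ports:
        args += ["-p", f"{host_port}:{container_port}"]  # publish a container port on the host
    for key, value in (env or {}).items():
        args += ["-e", f"{key}={value}"]                 # set an environment variable
    args.append(image)                                   # the image comes after the options
    return args


def docker_run(image, **options):
    """Run the command if the Docker CLI is installed and return its output."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    result = subprocess.run(build_run_args(image, **options),
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()  # with -d, docker run prints the new container's ID


print(" ".join(build_run_args("nginx:1.25", name="web", ports=[(8080, 80)], env={"TZ": "UTC"})))
# docker run -d --name web -p 8080:80 -e TZ=UTC nginx:1.25
```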

What are Docker repositories and where can I find Docker images?

Docker images are stored in repositories, each holding the different versions (tags) of one image, and repositories are hosted in registries. The best-known public registry is Docker Hub, which provides a huge selection of tested, pre-built images for different operating systems, databases, applications, and development tools. To control access to your own custom container images, you can also set up private repositories within your company.

How does Docker leverage the Linux kernel for containerization?

Docker relies on features built into the Linux kernel. Namespaces isolate each container's view of processes, networking, and the file system, and control groups (cgroups) limit and account for the CPU, memory, and I/O a container can use. Because containers share the host's kernel, they are leaner and more efficient than traditional virtual machines, each of which runs a complete guest operating system.

What are the benefits of using open-source components in Docker?

The open-source nature of Docker and many popular Docker images allows for transparency, community collaboration, and faster innovation. Open-source components also promote flexibility and customization for developers.